AI-Powered Photo Culling (B2B)

An AI side panel in Adobe Bridge that learns a photographer’s style and explains its reasoning.

Deepening my AI/UX skill set through a portfolio-ready prototype exploring human-centered AI design.

Key Outcomes

Projected performance metrics targeting efficiency, accuracy, and trust.*

* Target figures pending implementation and user testing.

Overview

Photographers face thousands of images per shoot, leading to hours of culling and fatigue. This project explores how AI can cut the repetition and give them more time to focus on shooting.

My Role in 2025

Used LLMs (ChatGPT Plus, Gemini Pro, Claude Pro, Perplexity Pro) and other AI tools to generate, iterate on, and refine project deliverables, creating feedback loops that improved both speed and quality.

Earned a certification in Master UX Design for AI.

Applied AI design principles to streamline workflows in Adobe Bridge.

Balanced automation with human control and explainability.

Credential

Completed Aug 2025.

A 5-week program focused on Conversational AI, Multimodal AI, and Human-Centered AI design.

Project Selection

Why I chose this project:

Targets a large viable market

Offers monetization potential for Adobe

Aligns with my portfolio’s B2B orientation

Draws on my 37 years of photography as a hobby, so I know the problem space well

Designing for Human-AI Alignment

I identified target users and their Jobs To Be Done (JTBD) to ensure we design for real human problems rather than “AI-washing”.

User & Jobs To Be Done

Persona: Phil, an event and travel photographer, represents professional users managing thousands of images per shoot.

Goal: Save time without losing creative control.

Pain points (mine too): Late nights culling near-duplicates, renaming files, and batch-editing just to stay organized.

JTBD: Organize folders, select best shots, apply quick batch edits, and rename files consistently.
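To make “rename files consistently” concrete, here is a minimal Python sketch of one possible naming convention. The `YYYYMMDD_event_NNNN` pattern and the `consistent_names` function are hypothetical illustrations, not Bridge’s built-in Batch Rename tool or a confirmed part of this design.

```python
from datetime import date


def consistent_names(originals: list[str], shoot_date: date, event: str, start: int = 1) -> dict[str, str]:
    """Map camera filenames to a hypothetical YYYYMMDD_event-slug_NNNN scheme."""
    slug = "-".join(event.lower().split())  # "Smith Wedding" -> "smith-wedding"
    renamed = {}
    # Sort by original name so the new sequence follows the camera's counter
    for seq, original in enumerate(sorted(originals), start=start):
        stem = f"{shoot_date:%Y%m%d}_{slug}_{seq:04d}"
        ext = original.rsplit(".", 1)[1].lower() if "." in original else ""
        renamed[original] = f"{stem}.{ext}" if ext else stem
    return renamed


print(consistent_names(["DSC_0412.NEF", "DSC_0411.NEF"], date(2025, 8, 2), "Smith Wedding"))
# {'DSC_0411.NEF': '20250802_smith-wedding_0001.nef', 'DSC_0412.NEF': '20250802_smith-wedding_0002.nef'}
```

Sorting by the camera’s original filename keeps the new sequence numbers in shooting order, assuming the camera’s counter has not rolled over.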

AI Learnings

Used NotebookLM to synthesize pain points and map them to JTBD.

Learned to frame AI as augmentation, not automation, ensuring photographers keep control.

Project Definition & Use Case

I used NotebookLM to draft and refine a new Project and Use Case Definition, summarized below and in the new sticky notes.

Project: an AI assistant that culls, renames, organizes, and applies first-pass edits to photos, augmenting rather than replacing creative control.

Aims to cut 50%+ of repetitive tasks while building trust through explainability, personalization, and control.

Researching & Validating Adobe Bridge

Research with Perplexity Pro showed that photographers typically use Lightroom and various AI tools for photo culling. For Phil and similar users, though, it also revealed an opportunity to streamline the culling process within Adobe Bridge.

Opportunity:

Event photographers shooting 2,000-8,000 images per session with 4-6 hour manual culling processes

Commercial studios managing 50,000+ image libraries across multiple drives and projects

Market size: an estimated 100,000+ professional Bridge users globally, based on Adobe's 32.5M Creative Cloud base

This represents an underserved but substantial market segment with clear, unmet needs
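As a quick sanity check, the estimates above can be turned into rough ratios. The inputs are the research figures quoted in this section and the project’s 50% time-savings target, not measured results.

```python
# Inputs quoted in this section (research estimates and a design target, not measurements)
pro_bridge_users = 100_000
creative_cloud_base = 32_500_000
target_savings = 0.50  # project goal: cut repetitive culling time by 50%

share = pro_bridge_users / creative_cloud_base
print(f"Pro Bridge users as a share of Creative Cloud: {share:.2%}")  # 0.31%

# Hours saved per event if a 4-6 hour manual cull shrinks by the target amount
for manual_hours in (4, 6):
    saved = manual_hours * target_savings
    print(f"{manual_hours} h manual cull -> about {saved:g} h saved")
```

So the target segment is a small slice (roughly 0.3%) of the Creative Cloud base, while the projected payoff per event photographer is roughly 2 to 3 hours per session.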

Additional Perplexity Pro research supported building the assistant into Bridge:

15-20% of professional photographers prefer direct folder control and non-catalog workflows, especially for high-volume events and commercial shoots

Bridge users face unique bottlenecks in AI tooling, and most solutions target Lightroom's catalog-based workflows

I used ChatGPT to parse the data and generate the initial table below, and I iterated the code using Gemini Pro.

Bridge vs Lightroom Comparison

| Feature / Characteristic | Adobe Bridge | Lightroom |
|---|---|---|
| Import Step | ✅ No import needed | ❌ Required before culling |
| Catalog System | ✅ No catalog – uses file system; no files duplicated | ❌ Catalog required; files duplicated |
| Risk of Catalog Corruption | ✅ None – no catalog means no corruption risk | ❌ Yes – if files are moved outside Lightroom |
| User Control | ✅ High – real-time file sync | ❌ Limited – external moves break links |
| Speed to Start Culling | ✅ Fast – no setup | ❌ Slower – import + previews |
| Metadata Handling | ✅ Saved to file or XMP | ❌ Stored in catalog |
| Folder Organization | ✅ User-controlled via OS | ❌ Organized via collections |
| File Access | Direct from folders | Through collections |
| Ideal Use Case | Manual culling, fast edits | Large photo libraries |
| Learning Curve | ✅ Lower – file browser UX | ❌ Higher – catalog behavior |
| AI Integration Potential | ✅ Ideal – flexible metadata workflows | ❌ Limited – catalog friction |
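The “Saved to file or XMP” row is what makes Bridge convenient for an AI assistant: suggestions can be written as ordinary metadata. The sketch below writes a minimal XMP sidecar containing `xmp:Rating` and `xmp:Label` from the XMP Basic namespace. It is a simplified assumption, not Bridge’s own metadata writer: a real implementation would merge into any existing sidecar instead of overwriting it, and formats such as JPEG or DNG can carry XMP inside the file itself.

```python
import xml.etree.ElementTree as ET
from pathlib import Path

# Standard namespaces for an XMP packet and the XMP Basic schema
NS = {
    "x": "adobe:ns:meta/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "xmp": "http://ns.adobe.com/xap/1.0/",
}
for prefix, uri in NS.items():
    ET.register_namespace(prefix, uri)


def sidecar_xml(rating: int, label: str) -> str:
    """Serialize a minimal XMP packet holding a star rating and a color label."""
    if not -1 <= rating <= 5:  # XMP Basic: -1 = rejected, 0 = unrated, 1-5 = stars
        raise ValueError("rating must be between -1 and 5")
    meta = ET.Element(f"{{{NS['x']}}}xmpmeta")
    rdf = ET.SubElement(meta, f"{{{NS['rdf']}}}RDF")
    ET.SubElement(rdf, f"{{{NS['rdf']}}}Description", {
        f"{{{NS['rdf']}}}about": "",
        f"{{{NS['xmp']}}}Rating": str(rating),
        f"{{{NS['xmp']}}}Label": label,
    })
    return ET.tostring(meta, encoding="unicode")


def write_sidecar(image: Path, rating: int, label: str) -> Path:
    """Write DSC_0001.xmp beside DSC_0001.NEF; the image file itself is never opened."""
    sidecar = image.with_suffix(".xmp")
    # Simplified: overwrites any existing sidecar instead of merging with it
    sidecar.write_text(sidecar_xml(rating, label), encoding="utf-8")
    return sidecar
```

Because the raw file is never touched, an AI suggestion written this way stays non-destructive and visible to any XMP-aware tool.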

User Journey

I created a new User Journey Map in Smaply, which proved to be quicker and more manageable than the older journey map in BuildUX.

I focused on Stage 3, Culling (highlighted in purple), because research identified it as a major pain point with significant ROI impact, reflected in users' emotions before and after AI implementation.

AI Capability Mapping

NotebookLM parsed data for AI Capability Mapping, aligning the photo culler's features with relevant AI technologies. Our lesson plans covered various AI technologies, giving examples for each. With NotebookLM, I created the mind map below, and my AI Capability Map Matrix document shows even more detail.

By consistently using the same AI terms (like Recommendation Systems and NLU) when talking to developers, I aim to improve communication, build trust, and ensure everyone is on the same page.

Purple boxes indicate the AI technologies used in this project. Gold boxes indicate the features and benefits associated with those technologies.

Conversational & Multimodal AI

Conversational AI

Adobe Bridge is powerful but can overwhelm new users with its many clicks and keystrokes. Culling photos can be complex and time-consuming, making it a perfect task for AI.

I chose a conversational chatbot as a sidekick because photographers can keep their images in view while asking it to cull photos, pick the top 10% for review, and more.
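Picking “the top 10%” reduces to ranking by a score and keeping a fixed fraction. A minimal sketch, assuming each image already has a quality score from some upstream model (the scores and the `top_percent` name are hypothetical):

```python
def top_percent(scores: dict[str, float], percent: int = 10) -> list[str]:
    """Return the highest-scoring filenames, keeping at least one image."""
    # Integer ceiling division: 25 images at 10% -> 3 picks, with no float rounding
    keep = max(1, (len(scores) * percent + 99) // 100)
    ranked = sorted(scores, key=scores.get, reverse=True)  # ties keep their original order
    return ranked[:keep]


# Hypothetical quality scores from an upstream model
scores = {"DSC_0411.NEF": 0.42, "DSC_0412.NEF": 0.91, "DSC_0413.NEF": 0.77}
print(top_percent(scores))  # ['DSC_0412.NEF']
```

The `max(1, ...)` guard means even a small folder yields at least one pick for the photographer to review.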

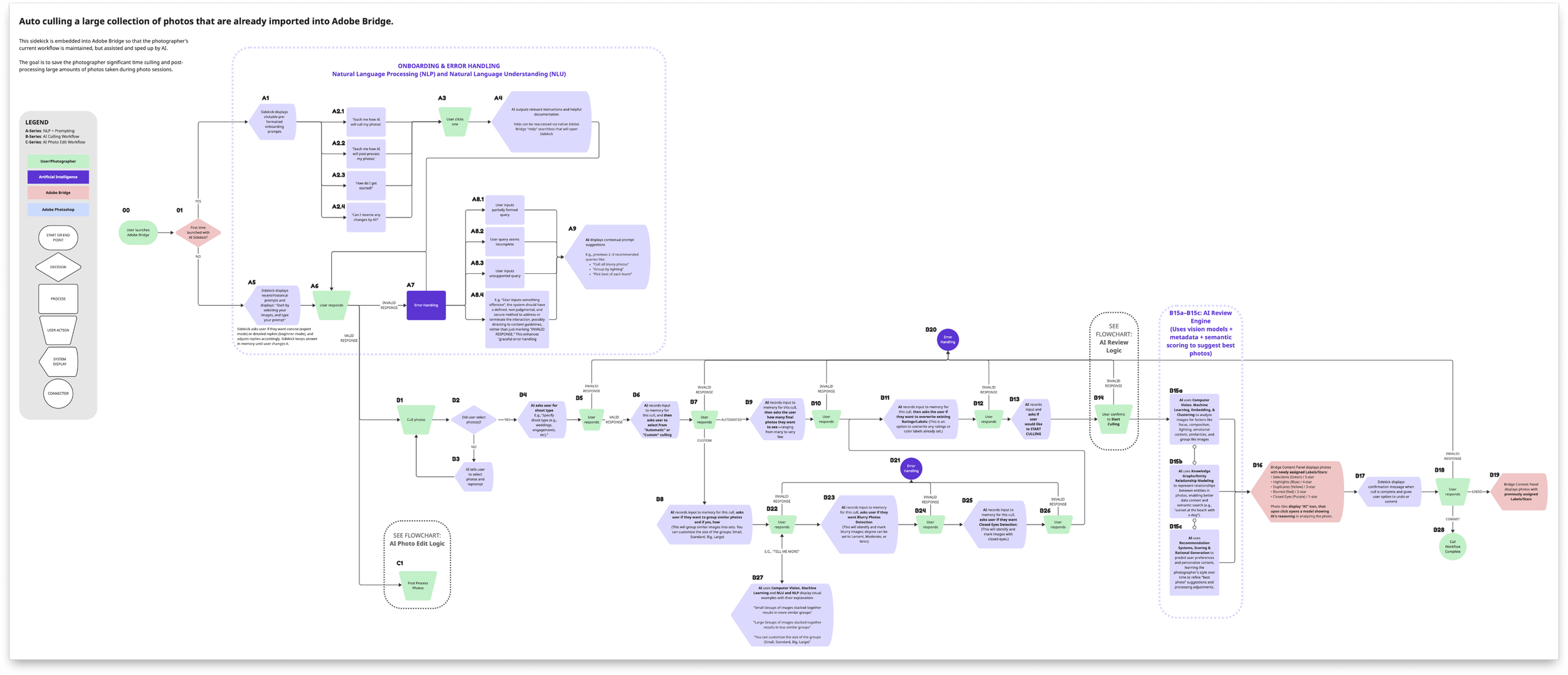

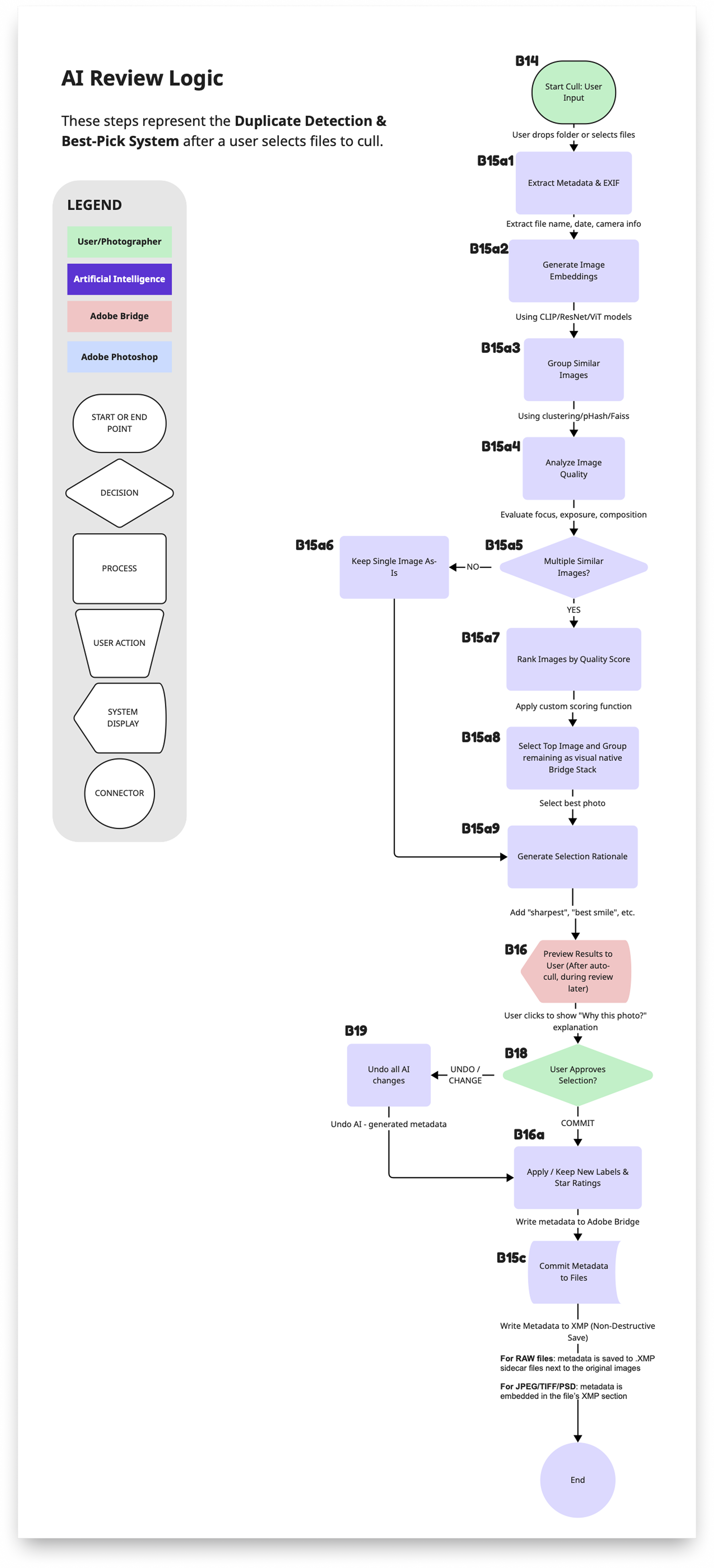

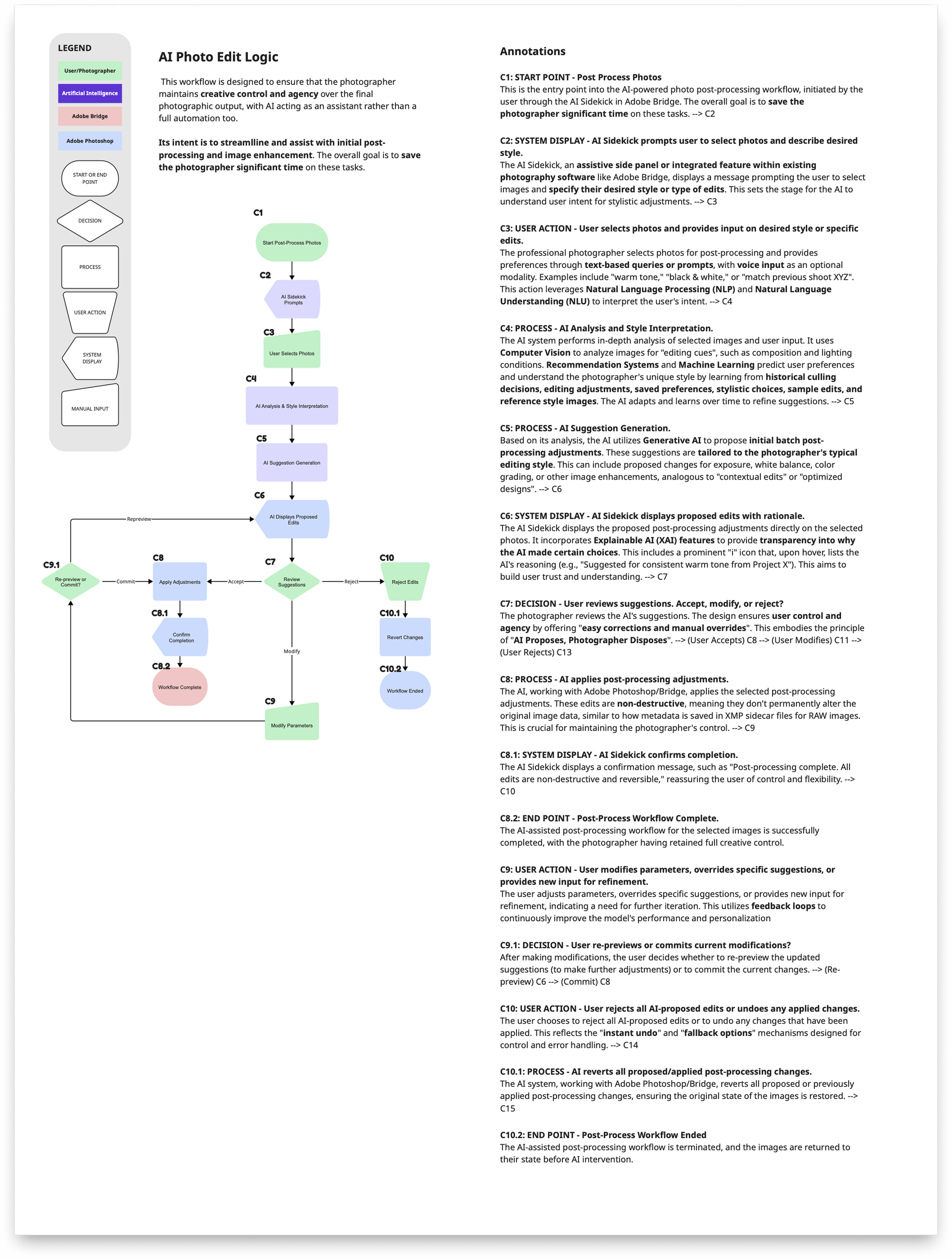

Chatbot behavior is non-deterministic (unpredictable and doesn’t follow a set path), so I created flowcharts to map out:

Step-by-step interaction sequences

Decision points and system responses

Error states and recovery paths

Key moments of AI assistance

Design principles and user mental model considerations

These flowcharts became a solid base for my Voiceflow conversational prototype.
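Flowcharts like these translate naturally into a state machine that developers can implement and test. The sketch below is hypothetical: the states and events are illustrative stand-ins for the interaction sequences, decision points, and recovery paths listed above, not an export of the actual flowcharts or of Voiceflow’s format.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    CLARIFYING = auto()  # decision point: the request is ambiguous, ask a follow-up
    CULLING = auto()     # key moment of AI assistance
    REVIEW = auto()      # photographer approves or refines the picks
    DONE = auto()
    ERROR = auto()       # error state with recovery paths


# (state, event) -> next state; all names are illustrative
TRANSITIONS = {
    (State.IDLE, "ambiguous_request"): State.CLARIFYING,
    (State.IDLE, "clear_request"): State.CULLING,
    (State.CLARIFYING, "answered"): State.CULLING,
    (State.CULLING, "suggestions_ready"): State.REVIEW,
    (State.CULLING, "failure"): State.ERROR,
    (State.REVIEW, "approve"): State.DONE,
    (State.REVIEW, "refine"): State.CLARIFYING,
    (State.ERROR, "retry"): State.CULLING,
    (State.ERROR, "manual"): State.DONE,  # recovery: photographer finishes by hand
}


def step(state: State, event: str) -> State:
    """Advance the flow; unrecognized events leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events: list[str], state: State = State.IDLE) -> State:
    for event in events:
        state = step(state, event)
    return state


print(run(["ambiguous_request", "answered", "suggestions_ready", "approve"]))
```

Keeping transitions in a table makes every path from the flowchart reviewable in one place, and an unexpected user message never strands the conversation in an undefined state.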

How I Used Multiple AI Tools To Create Flowcharts

Upload Context: In NotebookLM (NBLM), I uploaded all the source files for context, including lesson notes and all previous assignments.

Prompt: I prompted NBLM to create a flowchart explaining the process in step 5 (Post-Process) of my user journey map, instructing it to verify accuracy before providing the output.

Output: NBLM initially presented the flow in text form, which I then asked it to convert into a format Miro could visualize.

Redo: Miro converted NBLM's output into sticky notes. I didn’t want a sticky-note format, so I tried again, as described in the Miro AI step below.

Miro AI: In Miro, I used the AI “Diagram or mindmap” feature, selected the “Flowchart” option, and pasted the NBLM output into the prompt box.

Create Flowchart: Miro generated the visual flowchart in the format of the other flowcharts I had started on the Miro canvas, except for the colors.

Correct & Stylize: In Miro I reviewed and corrected any errors, adjusted the orientation, and added colors as needed.

Annotate: NBLM also generated annotations, which I reviewed and added next to the updated diagram in Miro.

Hypothesis

NotebookLM also effectively identified key areas for our hypotheses, referencing my past assignments and lessons for context. These hypotheses guided my design and helped me select the use cases to highlight in the prototype.

User Needs & Expectations

Hypothesis 1: Photographers will readily accept AI suggestions if the system transparently explains the reasoning behind its choices (e.g., “This photo is suggested because of its sharp focus and leading lines”).

Hypothesis 2: Users will adopt the AI solution if it demonstrably reduces the average time spent on culling, renaming, and organizing by 50%.

Hypothesis 3: Users will appreciate personalized suggestions for culling and processing that align with their specific style and preferences, which the AI learns over time.

AI Interaction & UX

Hypothesis 4: An assistive side panel or integrated feature within existing software (like Adobe Bridge) will lead to higher adoption compared to a standalone application.

Hypothesis 5: Multimodal interaction (visual exploration, text-based queries, and optional voice input) will enhance the efficiency and user satisfaction of tasks like culling and renaming.

Hypothesis 6: Users will prefer AI acting as a “smart assistant” that augments their workflow rather than a full automation tool that takes over creative control.

Workflow & Trust

Hypothesis 7: Providing easy corrections and manual overrides for AI suggestions will build user trust and ensure creative control.

Hypothesis 8: The AI’s ability to identify patterns not easily visible to the human eye will provide valuable insights, aiding in efficient selection and organization.
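Near-duplicate detection is one concrete case of this kind of pattern-finding. The sketch below uses average hashing (aHash), a simple perceptual-hash technique, and assumes each image has already been downscaled to a small grayscale grid; the greedy grouping strategy and the `max_distance` threshold are illustrative assumptions, not tuned values.

```python
def average_hash(gray: list[list[int]]) -> int:
    """aHash: one bit per pixel, set when the pixel is brighter than the grid's mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def group_near_duplicates(hashes: dict[str, int], max_distance: int = 5) -> list[list[str]]:
    """Greedy grouping: each image joins the first group whose seed hash is close enough."""
    groups: list[tuple[int, list[str]]] = []
    for name, h in hashes.items():
        for seed, members in groups:
            if hamming(seed, h) <= max_distance:
                members.append(name)
                break
        else:  # no close group found: start a new one seeded by this image
            groups.append((h, [name]))
    return [members for _, members in groups]
```

Each resulting group is a burst of near-identical frames from which the photographer (or the assistant) needs to keep only the best one. In practice, a library such as Python’s `imagehash` (with Pillow for resizing) provides tested implementations of this and more robust hashes.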

Hypothesis 9: Clearly communicating privacy controls and data usage policies will mitigate user concerns regarding AI analyzing sensitive content.

AI Design Patterns

Time to bring it all together: implementing appropriate AI Design Patterns that adhere to relevant Design Principles for this AI Photo Culler, and integrating it with the existing Adobe Bridge application.

Human-Centered AI (HCAI) Design Principles

HCAI Design Principles are crucial for developing AI projects that focus on user needs, contexts, and emotions. They ensure AI systems are not just advanced but also user-friendly, reliable, and trustworthy. These principles are key to the success of AI initiatives, as many projects fail to deliver real value due to inadequate user focus.

From the lesson readings, I drafted Design Principles that would:

Serve as a foundation for the product team to align on ethical standards and user and business needs, helping to avoid conflicts and encourage responsible AI development.

Provide a clear development roadmap, creating a shared understanding and consistent decision-making criteria.

Establish UX and functionality guidelines to aid in making informed choices about features and design.

Prevent redesign and redevelopment issues caused by unclear or missing basic guidelines.

Design Principle 1: User Control & Agency

AI Proposes, Photographer Disposes: Keep the human in the driver’s seat; every automation is reversible and explained. This builds appropriate trust.

Design Principle 2: Transparency & Explainability

Show the Why, Fast: Surface a one‑line rationale and confidence badge for each suggestion so users see model thinking without digging.
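A minimal sketch of how a suggestion could carry its one-line rationale and confidence badge. The `Suggestion` fields and the badge cut-offs are hypothetical; in a real product, thresholds would need calibration and user testing.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    filename: str
    action: str         # e.g. "keep" or "reject"
    confidence: float   # model score in [0, 1]
    reasons: list[str]  # strongest signals first


# Hypothetical cut-offs, checked from highest to lowest
BADGES = [(0.85, "High"), (0.60, "Medium"), (0.0, "Low")]


def badge(confidence: float) -> str:
    for cutoff, label in BADGES:
        if confidence >= cutoff:
            return label
    return "Low"


def one_line_rationale(s: Suggestion, max_reasons: int = 2) -> str:
    """Compress the top reasons into a single line shown next to the thumbnail."""
    why = " and ".join(s.reasons[:max_reasons]) or "overall score"
    return f"{s.action.capitalize()} ({badge(s.confidence)} confidence): {why}"


s = Suggestion("DSC_0412.NEF", "keep", 0.91, ["sharp focus", "leading lines", "good exposure"])
print(one_line_rationale(s))  # Keep (High confidence): sharp focus and leading lines
```

Capping the rationale at two reasons keeps it scannable at a glance, which is the point of “Show the Why, Fast”.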

Default to on‐device processing: Clearly list data used and allow opt‐out of cloud training.

My flowcharts and Project Definition guided the agent’s responses to privacy concerns and opting out of cloud training. The video below tests how Sensei might respond.

Turn on sound to hear voiceover

Design Principle 3: Usefulness & Task Completion

Speed with Safeguards: Target 50% workflow time savings while enforcing non-destructive edits and instant undo.

Safeguards in place: all changes are non-destructive and can be undone before and after committing the cull.
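One way to guarantee that every change can be undone, even after committing the cull, is to record decisions in a history log instead of altering files. A minimal sketch under that assumption (the `CullSession` class and its methods are hypothetical):

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CullSession:
    """Ratings are tracked separately from the image files, which are never modified."""
    ratings: dict[str, int] = field(default_factory=dict)
    # Each entry stores (filename, previous rating, or None if it was unrated)
    history: list[tuple[str, Optional[int]]] = field(default_factory=list)

    def rate(self, filename: str, stars: int) -> None:
        self.history.append((filename, self.ratings.get(filename)))
        self.ratings[filename] = stars

    def undo(self) -> None:
        """Revert the most recent rating; works before and after commit."""
        if not self.history:
            return  # nothing to undo: a no-op rather than an error
        filename, previous = self.history.pop()
        if previous is None:
            del self.ratings[filename]
        else:
            self.ratings[filename] = previous

    def commit(self) -> dict[str, int]:
        """Return a snapshot to write out as metadata; history is kept for later undo."""
        return dict(self.ratings)
```

Because `commit` returns a copy and leaves the history intact, undoing after a commit simply produces a new snapshot to write, rather than requiring the original files to be restored.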

Design Principle 4: Human-AI Alignment

Learn My Style, Then Adapt: Personalization improves after every shoot, but users can reset or export their style profile at any time.

Users can rate every AI suggestion and approve, reject, show more images like this, or never show images like this.
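The four feedback options map naturally onto a simple per-user preference model. The sketch below is a deliberately basic stand-in, a linear weighting over named image traits, and not how Adobe Sensei or any production recommender works; the trait names and weight deltas are hypothetical. It also shows the reset and export controls from the principle above.

```python
import json
from typing import Optional

# Hypothetical weight change for each feedback button
FEEDBACK_DELTA = {"approve": 0.1, "reject": -0.1, "more_like_this": 0.3, "never_like_this": -1.0}


class StyleProfile:
    """Per-user weights over image traits such as "leading_lines" or "motion_blur"."""

    def __init__(self) -> None:
        self.weights: dict[str, float] = {}
        self.blocked: set[str] = set()

    def record(self, action: str, traits: list[str]) -> None:
        """Nudge trait weights after the photographer rates a suggestion."""
        delta = FEEDBACK_DELTA[action]
        for trait in traits:
            self.weights[trait] = self.weights.get(trait, 0.0) + delta
            if action == "never_like_this":
                self.blocked.add(trait)

    def score(self, traits: list[str]) -> Optional[float]:
        """Higher means more on-style; None means the image should be hidden."""
        if any(trait in self.blocked for trait in traits):
            return None
        return sum(self.weights.get(trait, 0.0) for trait in traits)

    def export(self) -> str:
        """Portable JSON copy of the profile, for backup or moving between machines."""
        return json.dumps({"weights": self.weights, "blocked": sorted(self.blocked)}, indent=2)

    def reset(self) -> None:
        """Forget everything learned so far."""
        self.weights.clear()
        self.blocked.clear()
```

Treating “never show images like this” as a hard block rather than a low score respects the photographer’s explicit choice instead of letting other signals outweigh it.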

Design Principle 5: Error Handling & Recovery

No Dead Ends, Always a Fallback: Always enable workflow continuity through graceful degradation, offline fallbacks, and clear recovery from AI misfires.
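“No dead ends” can be expressed as an ordered chain of fallbacks. In this minimal sketch, the tiers (a cloud model, then an on-device model) and their names are hypothetical; if every tier fails, the decision returns to the photographer rather than surfacing an error.

```python
from typing import Callable, Optional

Scorer = Callable[[str], float]


def cull_with_fallbacks(filename: str, tiers: list[tuple[str, Scorer]]) -> tuple[str, Optional[float]]:
    """Try each scoring tier in order; if all fail, return control to the photographer."""
    for name, scorer in tiers:
        try:
            return name, scorer(filename)
        except (OSError, RuntimeError):  # e.g. offline, timeout, model failure
            continue  # degrade gracefully to the next tier
    return "manual", None  # never a dead end: the user can still cull by hand


# Hypothetical tiers for illustration
def cloud_model(filename: str) -> float:
    raise ConnectionError("no network")  # simulate working offline


def on_device_model(filename: str) -> float:
    return 0.72  # placeholder score


print(cull_with_fallbacks("DSC_0412.NEF", [("cloud", cloud_model), ("on-device", on_device_model)]))
# ('on-device', 0.72)
```

Returning which tier answered lets the UI tell the user when it has fallen back to a less capable model, which supports the transparency principle as well.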

Vibe Coding & Prototypes

Before finalizing a prototype method, I tried several vibe coding tools to see how well they replicated Adobe Bridge’s UI. Choosing the right tools is key to achieving faster and better results, so I like to try them out before committing. I’m excited to see how they evolve and improve in the future.

Below, I share my experiences with each tool through Loom videos. Here is a general summary across all of them:

Vibe code tools help generate ideas or create initial apps, but designers are still essential for refining the output.

Each tool requires extra effort and tokens to improve the design to align with the final vision.

For pixel-perfect accuracy or to replicate an existing app, Figma currently remains my top choice for visual control.

Unmute the sound on each video to hear my narration.

Claude

Claude seems promising, with the ability to upload actual photos and more functionality than the others.

v0

I found v0 a promising tool for continued iteration during user research, but not for a pixel-perfect prototype recreating Adobe Bridge.

Lovable

Lovable wasn’t great: I prompted it to match some Adobe Bridge screenshots, but the result was far off.

Magic Patterns

Magic Patterns was an interesting experiment, but I found some limitations in functionality.

Figma Make

I was unable to replicate Adobe Bridge with Figma Make, and ultimately decided to pivot back to regular Figma for a more visually accurate prototype.

Voiceflow

Voiceflow’s functional Agent simulated the conversational chatbot portion of the design.

I used this to gauge how the conversation flowed, how the AI behaved and interpreted user context, where the AI needs guardrails, and when to trust its output.

This shaped my Figma demo prototype.

Figma Prototype (Mid-Fidelity)

I chose to build an interactive demo prototype in Figma, using screenshots of the real Adobe Bridge application combined with Adobe Spectrum Design System components. This afforded me the most control, visual parity to Bridge, and accurate design intent. I’ll use this for the initial round of user testing.

Click below to interact with the prototype (works best on desktop or laptop)

Reflection + Next Steps

What I Learned About Designing for AI

AI is merely another tool that can help users achieve their goals if used effectively.

To create effective solutions, we must first understand our users and the challenges they face.

Key AI design principles (User Control and Agency, Transparency & Explainability, Usefulness & Task Completion, Human-AI Alignment, Error Handling & Recovery) are essential for the success of AI products. Ignoring these principles may lead to low user adoption.

What I’d Explore Next

I’m excited to use AI to improve artifact creation, such as journey maps, design principles, flow charts, and UX flows.

I look forward to exploring vibe coding with product definitions, refining designs in Figma, and iterating into prototypes.

My Interest in AI/ML Product Design

AI and ML have reignited my passion for Product and UX design. The efficiency AI brings will enable me to create amazing tools and products, marking the start of our journey toward super-intelligence.

However, AI and ML still need human oversight to review and refine their outputs, and I believe Product Designers are ideally suited for this role. We connect complex AI technologies with human-centered experiences, aligning user mental models with AI to ensure adoption.